Time for a new NAS

My home server was getting old. Its N3150 Celeron CPU had enough grunt to handle the routing for my network and the Samba shares, but not much left over. The machine had four disks: three 250GB 2.5-inch HDDs and one 4TB HDD. One of the laptop disks was the “boot and root” disk, the other two formed a small ZFS mirror, and the 4TB disk was a single big ZFS pool.

I decided to upgrade for a few reasons, but mainly I wanted to expand the storage capacity and add more redundancy. I also run a Jellyfin instance, and the old server could not do hardware-accelerated transcoding. That meant that whenever I wanted to Chromecast anything, the CPU would max out. Two simultaneous streams were out of the question.

The final challenge was that I wanted the transition to be as seamless as possible, keeping the old server running until everything on the new box was ready. I also wanted to reuse the 4TB disk from the old server in the new one, so there were some logistical obstacles to overcome.

Hardware

I wanted another low-power CPU, but one with a newer iGPU that supports the hardware video acceleration I was after. The board would ideally have an M.2 2280 slot for the “boot and root” disk and enough SATA ports for the drives. Dual LAN ports would also be great, as the old server was also the router for my network.

In the end I went for a J4125 CPU and the only board available: https://www.asrock.com/mb/Intel/J4125-ITX/

The board has 4x SATA ports, but no M.2 NVMe/SSD slot and only 1 LAN port.

I decided to give up on a second LAN port and let my VDSL modem/router do the routing instead. So far I have not noticed any drop in internet or internal network speeds, and I can now turn off the server without dropping our internet connection. If I wanted, I could still run PPPoE on the server over the single LAN cable. Packets would then come into the server and go back out over the PPP connection via the same interface. The link is gigabit and the internet connection is only ~70 Mbps, so that shouldn’t be a problem.
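Here are some rough numbers for that last point. Suppose the server did route over a single port. Download traffic would arrive from the modem and leave towards a LAN client on the same NIC, and upload traffic would do the reverse. Gigabit Ethernet is full duplex, so the load in each direction is download plus upload. This sketch makes two simplifications:

* It pessimistically assumes upstream is as fast as the ~70 Mbps downstream.
* It ignores the few bytes of PPPoE header per packet.

The constant and function names are made up for illustration.

```python
# Rough link-load estimate for routing over a single gigabit port ("router on a stick").
# Assumption: upstream is treated as fast as downstream, which is pessimistic.
LINK_MBPS = 1000       # gigabit Ethernet, full duplex
INTERNET_MBPS = 70     # approximate internet speed quoted in the post


def per_direction_load(download_mbps: float, upload_mbps: float) -> float:
    """Mbps carried in each direction of the server's single NIC.

    Downloads enter from the modem (RX) and leave towards a LAN client (TX);
    uploads enter from the client (RX) and leave towards the modem (TX).
    Each direction therefore carries the download plus the upload once.
    """
    return download_mbps + upload_mbps


load = per_direction_load(INTERNET_MBPS, INTERNET_MBPS)
print(f"{load:.0f} Mbps per direction, {load / LINK_MBPS:.0%} of the link")
```

Even in this pessimistic case, internet traffic would take about 14% of each direction. That leaves most of the link for LAN traffic such as the Samba shares.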

The lack of an M.2 slot for storage was more of a problem, because one of the four SATA ports now has to be used for the boot disk. As I explain below, I wanted four ports for the NAS drives, so I was one short. In the end I bought a PCIe 2.0 two-port SATA controller card, giving me six ports in total. That leaves one spare, so I could add an SSD as an L2ARC cache in the future if I wanted.

It’s really the wrong time to be buying HDDs. In the name of future upgradeability, I decided to build a RAID10-esque ZFS array, that is, a zpool made of two pairs of mirrored drives. If one drive from each mirror fails, the pool remains functional. In other words, any single drive failure is safe, and a two-drive failure is safe as long as both failed drives are not in the same mirror. Resilvering a disk in a mirror is also much less work than resilvering one in a RAIDZ array. Only its partner has to be read, rather than every other disk, which again reduces the chance of a second disk failing during the rebuild.
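The failure-tolerance claim can be checked by brute force. This minimal sketch enumerates every one- and two-drive failure for two striped 2-way mirrors, using hypothetical drive labels. It applies the rule that the pool survives as long as every mirror still has at least one working disk.

```python
from itertools import combinations

# Two 2-way mirrors striped together; drive labels are hypothetical.
mirrors = [("a1", "a2"), ("b1", "b2")]
drives = [d for pair in mirrors for d in pair]


def pool_survives(failed: set) -> bool:
    """The pool survives while every mirror still has at least one working disk."""
    return all(any(d not in failed for d in pair) for pair in mirrors)


for k in (1, 2):
    cases = list(combinations(drives, k))
    survivable = sum(pool_survives(set(c)) for c in cases)
    print(f"{k}-drive failures: {survivable}/{len(cases)} survivable")
```

All four single-drive failures are survivable, and four of the six possible two-drive failures are too. The two fatal cases are losing both disks of the same mirror.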

Still, that meant that to use the existing 4TB drive I would need another 4TB drive, plus any matching pair of drives for the other mirror. In the end I bought a single new 4TB Toshiba NAS drive and two Western Digital Red Pro 4TB drives from eBay (from different sellers and different batches!).

Fortunately, the Reds showed no SMART failures, so I assumed everything was OK.

I bought a nice NAS case (https://www.ipc.in-win.com/soho-smb-iw-ms04) instead of reusing my old rackmount one. It’s a lovely case, and it was very easy to install the motherboard and wire everything up.

Software

I use Ubuntu Server for everything, so no surprise that I used it here too. I installed the base system and little else and set up SSH access. From then on everything could be done remotely.

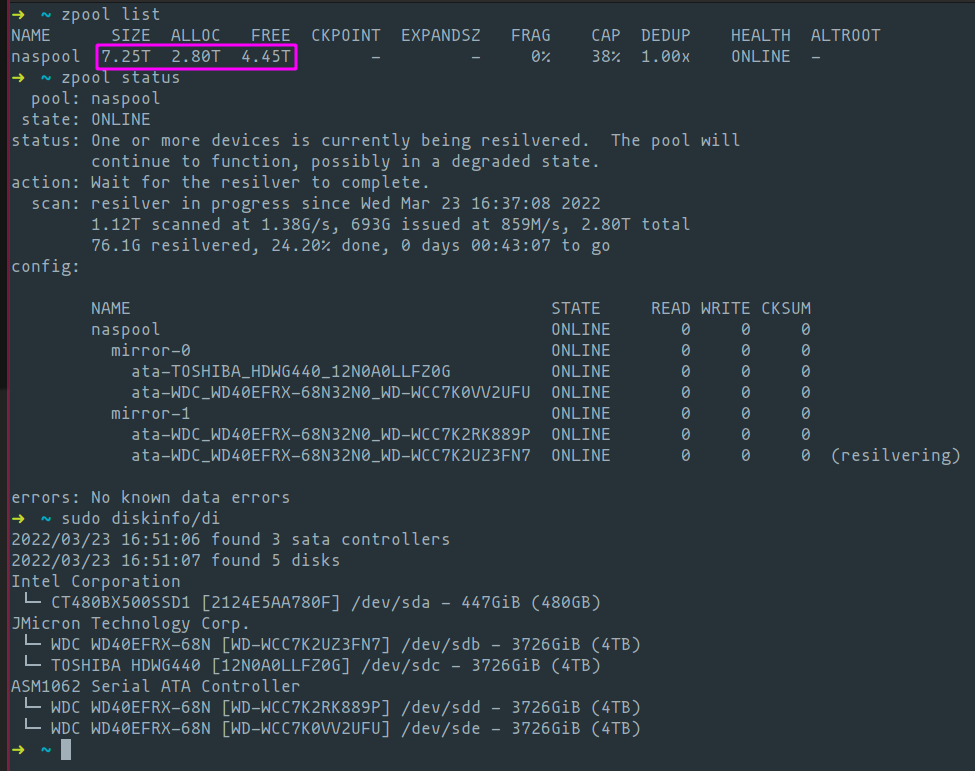

ZFS is awesome and has a great feature called ZFS send/receive, which can send snapshots of a ZFS filesystem to another system. I used it over the network to copy the filesystem from the existing server onto the new one. Remember that at this point I only had three disks, so the zpool on the new server consisted of a single disk (the Toshiba) and a mirrored pair (the two WD Red disks). This created a 7.25TB pool, into which I zfs recv’d the zfs send output. I kept using the old server, and once I was ready to switch over I only had to do an incremental zfs send. The whole process was awesomely simple.
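A migration like this boils down to a full replication send followed by an incremental one. The Python below only builds the shell pipelines as strings and does not run them. It rests on these assumptions:

* The pool name `tank`, host `newnas` and snapshot names `migrate1`/`migrate2` are hypothetical.
* The snapshots were created beforehand with something like `zfs snapshot -r tank@migrate1`.

The flags do the following:

* `-R` sends the dataset tree with its snapshots and properties.
* `-i` makes the second stream incremental.
* `-F` on the receiving side forces a rollback of the destination and can destroy snapshots that do not exist on the sender. It should only be used on a pool that is a pure copy.

```python
import shlex
from typing import Optional

# Hypothetical names; substitute your own pool, host and snapshot labels.
SRC_POOL = "tank"
DST_HOST = "newnas"
DST_POOL = "tank"


def send_pipeline(snapshot: str, base: Optional[str] = None) -> str:
    """Return a `zfs send | ssh ... zfs recv` pipeline as a shell string.

    With no base this is a full replication stream of SRC_POOL@snapshot;
    with a base snapshot only the changes since that snapshot are sent.
    The string is only built here, never executed.
    """
    send = ["zfs", "send", "-R"]
    if base is not None:
        send += ["-i", f"{SRC_POOL}@{base}"]
    send.append(f"{SRC_POOL}@{snapshot}")
    recv = ["ssh", DST_HOST, "zfs", "recv", "-F", DST_POOL]
    return f"{shlex.join(send)} | {shlex.join(recv)}"


print(send_pipeline("migrate1"))                   # initial full copy
print(send_pipeline("migrate2", base="migrate1"))  # catch-up at switch-over
```

The incremental stream only carries blocks changed since `migrate1`. That is why the final switch-over is quick even when the initial copy took a long time.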

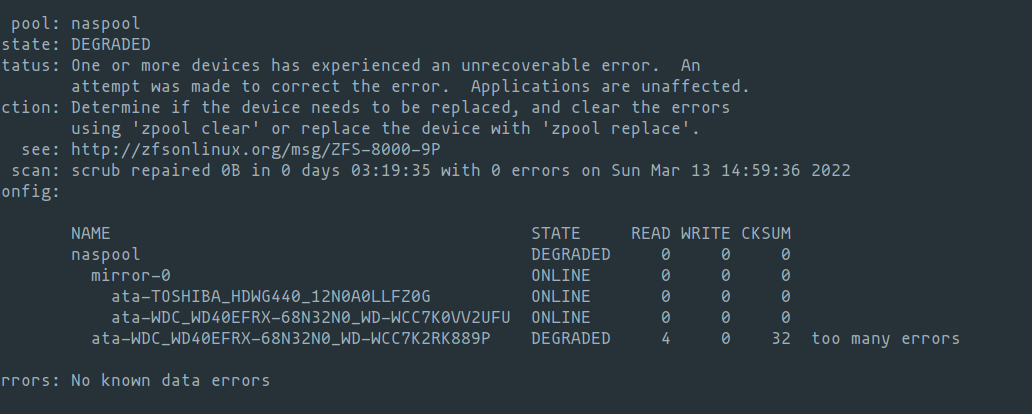

ZFS has another great feature called scrub, which verifies all data on disk against the checksums it keeps for that data. I thought it would be a good way to check that the zfs send had worked and that the disks and zpool were OK. However…

That’s right: read and checksum errors. I re-ran the process and got more errors, in different numbers each time. I was pretty panicked at this point, thinking I had wasted money on bad disks (only the eBay’d WD Reds were showing errors).

However, I had read somewhere that dodgy cabling can cause disk read errors. I opened the case, reseated all the SATA cables and closed it all up again.

A second scrub and no errors, phew.
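Spotting this kind of problem comes down to reading the READ, WRITE and CKSUM columns of `zpool status`. This minimal sketch pulls out any device row with a non-zero counter. The sample text is a made-up excerpt in the shape of `zpool status` output, not the output from the scrubs described above. The parser treats any counter other than `0` as a problem, so it also copes with abbreviated values such as `1.2K`.

```python
# Hypothetical excerpt shaped like `zpool status` output (not real results).
SAMPLE = """\
  pool: tank
 state: ONLINE
config:

        NAME        STATE     READ WRITE CKSUM
        tank        ONLINE       0     0     0
          sdb       ONLINE       0     0     0
          mirror-1  ONLINE       0     0     0
            sdc     ONLINE       3     0    12
            sdd     ONLINE       0     0     0

errors: No known data errors
"""

# Vdev states that can appear in the STATE column of the config table.
STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}


def devices_with_errors(status_text: str) -> dict:
    """Map device name -> (read, write, cksum) for rows with any non-zero counter.

    Counters are kept as strings and anything other than "0" counts as an
    error, so abbreviated values like "1.2K" are still flagged.
    """
    bad = {}
    for line in status_text.splitlines():
        fields = line.split()
        if len(fields) >= 5 and fields[1] in STATES:
            counters = tuple(fields[2:5])
            if any(c != "0" for c in counters):
                bad[fields[0]] = counters
    return bad


print(devices_with_errors(SAMPLE))
```

Once the cause is fixed (here, the cabling), `zpool clear tank` resets the error counters so the next scrub starts from a clean slate.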

Finally, when it was all set up, I took the 4TB drive out of the old server, put it in the new one, ran zpool attach to add it to the “single” disk in the zpool and voilà:
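`zpool attach <pool> <existing-device> <new-device>` turns a single-disk vdev into a mirror, or widens an existing mirror. A small model of the before and after layouts shows why this step matters. Before the attach, the lone Toshiba was a single point of failure for the whole pool. Attaching a disk adds redundancy without adding capacity, since a mirror is only as large as its smallest member. The disk names and sizes below are illustrative.

```python
# Illustrative model of the pool before and after `zpool attach`.
# Each inner list is one top-level vdev; disk names are hypothetical.
before = [["toshiba"], ["wd_red_1", "wd_red_2"]]
after = [["toshiba", "old_4tb"], ["wd_red_1", "wd_red_2"]]
size_tb = {"toshiba": 4, "old_4tb": 4, "wd_red_1": 4, "wd_red_2": 4}


def single_points_of_failure(vdevs):
    """Disks whose loss alone takes the pool down: the sole disk of any
    non-redundant top-level vdev (losing any top-level vdev loses the pool)."""
    return [vdev[0] for vdev in vdevs if len(vdev) == 1]


def usable_tb(vdevs):
    """Striped vdevs add up; a mirror contributes its smallest member."""
    return sum(min(size_tb[d] for d in vdev) for vdev in vdevs)


for name, layout in (("before", before), ("after", after)):
    print(name, single_points_of_failure(layout), usable_tb(layout), "TB")
```

Both layouts come out at 8 TB usable, but only the "before" layout has a single point of failure.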

The resilver finished quickly enough (and ZFS emailed me to say so). So now I have a zpool of two 2-way mirrors with 7.25TB of space. The upgrade path only requires replacing two disks (both disks of one mirror) with larger models, and the pool will expand to fill the available space. If I had gone for RAIDZ1 with four disks, I would have had to replace all four for an upgrade and could only have tolerated a single drive failure. 50% storage efficiency is the price to pay, but I want this to be a secure place for my important data (photos!).
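The capacity trade-off can be worked through for four 4 TB disks. This back-of-envelope sketch ignores ZFS metadata, slop space and RAIDZ padding, so real numbers come out a little lower. The 8 TB upgrade drives are hypothetical. Converting to binary units also explains the 7.25TB figure. 8 decimal terabytes is about 7.28 TiB, and ZFS tools print sizes in powers of 1024, so the small remaining gap is plausibly filesystem overhead.

```python
TB, TIB = 10**12, 2**40


def mirrors_usable_tb(mirrors):
    """Striped 2-way mirrors: each mirror contributes the size of its smaller disk."""
    return sum(min(pair) for pair in mirrors)


def raidz1_usable_tb(disks):
    """One RAIDZ1 vdev: (n - 1) x smallest disk, ignoring padding and metadata overhead."""
    return (len(disks) - 1) * min(disks)


def to_tib(tb):
    """Convert decimal terabytes (as drives are sold) to binary TiB."""
    return tb * TB / TIB


layout = [(4, 4), (4, 4)]
raw = sum(sum(pair) for pair in layout)
m = mirrors_usable_tb(layout)
r = raidz1_usable_tb([4, 4, 4, 4])
print(f"2x mirror: {m} TB ({to_tib(m):.2f} TiB), {m / raw:.0%} efficient")
print(f"RAIDZ1:    {r} TB ({to_tib(r):.2f} TiB), {r / raw:.0%} efficient")

# Hypothetical upgrade: swap both disks of one mirror for 8 TB drives.
upgraded = [(8, 8), (4, 4)]
print(f"after upgrade: {mirrors_usable_tb(upgraded)} TB")
```

The pool only grows into the larger disks after both disks in that mirror have been replaced and the pool's `autoexpand` property is on (for example `zpool set autoexpand=on tank`). Alternatively, run `zpool online -e` on the replaced devices.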

And yes, I know RAID is not backup, and neither is synchronisation. However, the mirrors protect my data in this location and synchronisation keeps a copy elsewhere, so it all helps as part of a balanced diet.